I have recently started working with computer vision problems and one of the first problem i needed to solve was getting the 3D coordinates from a 2D image. I had absolutely no clue to where I should start and I ended up reading lots of theory behind image formation and simple models of camera called pinhole camera model.

In the pinhole camera model, you imagine a box with a small aperture through which light can enter and the image plane is inside the box. This model basically give a transformation equation from a 3D coordinate to 2D image plane coordinates with the focal length as a variable. In a real camera, lenses are used and hence there are more variables internal to the camera like lens distortion coefficients- both tangential and radial. These parameters are called the intrinsic parameters. Once these intrinsic parameters are known, the transformation between a 3D coordinate system and 2D system can be solved. And camera calibration is a method to do just that.

A good calibration software for Matlab is available HERE It also contain links to various other works on camera calibration.

To get the depth of the image, a single camera is not sufficient. 2 cameras can be used for calibration and that procedure is called stereo calibration. There is a library containing different types of algorithm for computer vision problems called OPENCV.

There are mainly two types of calibration process - photogrammetric calibration which is calibrating a camera by viewing an object with known size and location and is the commonly used method for almost all calibration methods. The second method is self-calibration which aims to calibrate a camera by moving it and has not been fully developed yet.

For photogrammetric calibration as the one in the link above, its easy to be confused with how the chessboard(calibration object) coordinates are taken. I thought at first that all the values of 3D coordinate of the object is known but this is not the case. Only the X and Y coordinate values are known as the chessboard has all patterns of same size. The third value ie the z cordinate is not known and is taken as constant. This is logical as the frame of reference is fixed on the board and hence we can alway assume z to be any constant. In the equation

x=MRTw

where x=image coordinate vector, M= camera matrix , R=rotational vectors ,T=translational vectors, w=object vector.

Its easy to wrongly assume that the translation and rotational vectors are somehow used to determine the depth of the object from the camera. The coordinates are taken as fixed on the chessboard. So you have x axis along the width and y axis along height or vice versa and finally the z axis perpendicular to the plane. During the calibration process the z value is always assumed to be constant. This is abit confusing as we are moving the chessboard around for each calibration image.

To understand this, imagine the chessboard as lying flat on a table and assume the coordinates as described above. Now you move the camera to get the same image as the ones taken before. Here, the rotation and translation of the camera is described by the rotation and translational vectors ie how the camera rotate and translate relative to the fixed frame of the chessboard and hence the depth of the camera from the board is not known at all. All we are doing is getting the XY values (since those values are known) so that we can determine the intrinsic parameters. The rotation and translation vectors have the same meaning when doing the calibration by moving the chessboard and keeping camera fixed.

Thursday, 27 August 2009

Thursday, 12 March 2009

Does a Genetic Algorithm Always Have To Be Population Based??

Genetic algorithm (GA) is a computational method of problem solving which draws inspirations from the mechanisms of evolution. It is a population based algorithm in which the population consist of potential solutions, called genotypes. The first step is the encoding process which simply creates a ‘template’ to represent a solution. Note that these potential solutions are not the real solution to the problem; they are just a random instance of a potential solution. A simple analogy for this process in computer programming would be to define what data type to be used and then randomly generating values of that data type. The algorithm will then ‘evolve’ the population over a number of generations using a fitness function which gives guidelines to evaluate the genotypes and thereby a way to produce better genotypes.

Recombination and mutation are the techniques which are used to evolve the solution of the algorithm. Both of these methods are modelled on their biological counterparts. Recombination involves taking two genotypes and creating a new genotype by combining these two genotypes in some fashion. Mutation involves altering the values of the genotype to any other values permitted by the encoding ‘template’. Over the generations, the older genotypes ‘dies’ off and are replaced by better genotypes. Hence, we get a population with higher average fitness value which will move towards the correct or optimal solution.

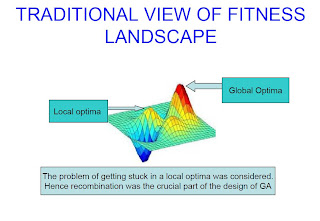

In the classical view of genetic algorithm, the evolutionary landscape was thought to be rugged as shown in the figure above and the algorithm was seen as performing a ‘hill climbing’ function on this landscape. In such scenario, there is a chance of not finding the global optima. Neutral networks are like ridges connecting two terrains with same fitness value. Thus, the search can jump from one terrain to another by mutation. If such networks exist abundantly throughout the landscape, the search will never get stuck in local optima and hence the evolutionary dynamics changes considerably. The term ‘neutral network’ has been conceived by Schuster while doing research on RNA evolution. Thus the landscape can now be viewed as layers of neutral networks connected by mutations where each neutral network has a unique fitness value.

In the classical view of genetic algorithm, the evolutionary landscape was thought to be rugged as shown in the figure above and the algorithm was seen as performing a ‘hill climbing’ function on this landscape. In such scenario, there is a chance of not finding the global optima. Neutral networks are like ridges connecting two terrains with same fitness value. Thus, the search can jump from one terrain to another by mutation. If such networks exist abundantly throughout the landscape, the search will never get stuck in local optima and hence the evolutionary dynamics changes considerably. The term ‘neutral network’ has been conceived by Schuster while doing research on RNA evolution. Thus the landscape can now be viewed as layers of neutral networks connected by mutations where each neutral network has a unique fitness value.In the presence of such networks, a new algorithm has been proposed which is very different from the traditional genetic algorithm. This algorithm doesn’t use recombination method and have a population of only one genotype. A mutated genotype is created and the fitness values of the mutated genotype and the original genotype are compared. If the fitness value of the mutated genotype is smaller than the original genotype, the original genotype is selected for further mutation; otherwise the mutated genotype is selected for further mutation. These steps are repeated a number of times till we get an optimal solution. And it has been shown that this algorithm performs much better than the population based GA which shows that a GA doesn’t need to be population based all the time, at least not when there are neutral networks.

Neutral Networks In Artificial Evolution

Neutral network basically mean a set of genotypes with the same fitness value. Consider the figure above which I've plagiarized from Inman's article in scholarpedia. The yellow, green and blue layers are the neutral networks and there is a single fitness value for each layer. The red bars represent the mutation which allows movement from one network to another.

Neutral network basically mean a set of genotypes with the same fitness value. Consider the figure above which I've plagiarized from Inman's article in scholarpedia. The yellow, green and blue layers are the neutral networks and there is a single fitness value for each layer. The red bars represent the mutation which allows movement from one network to another.So what is the significance of this??

The most important outcome of the existence of the above phenomenon is in the effect it has on the design of the genetic algorithm. Classical view of genetic algorithm has always neglected this phenomenon as a result of which genetic algorithm look the way they do today. In the presence of the neutrality, the design of genetic algorithm changes as pointed out by Lionel Barnett in the paper- Lionel Barnett (2000) Netcrawling - Optimal Evolutionary Search with Neutral Networks. This paper presents a new algorithm to deal with problems containing neutrality.

A good description of neutral network by Inman Harvey is available here. At the time of this post, this is the only proper description of neutral network available on the net.

Genetic Algorithm In A Nutshell

Genetic algorithm is a programming technique for problem solving by mimicking evolution. It has been used extensively in robotics and other applications where you would want to do away with hard coding certain procedures for obtaining solution and leave the program to decide the best solution on its own.

Given any problem to solve, potential solutions are encoded and fed into the program. These potential solutions are called genotypes and the set of all genotypes is called the population of the GA. This population is then 'evolved' using a fitness function and other techniques which changes the population to a new population with better average fitness value. One complete cycle which changes the old population to a new one is taken as one generation.

The 'evolution' is done by using a combination of two techniques which also draw inspiration from biology. The first is the recombination method which is the basically

recombining the genotypes to produce a new genotype. Mutation is the second method involved which is basically mutating the genotype to any possible value permitted by the encoding standard.

This procedure is repeated over many generations. So, in course of time, the GA will optimize its population to one containing comparatively larger number of better solution candidates.

As GA is basically a type of optimization method, it is not suitable for all types of problem. A good description of the types of problems suitable for genetic algorithm can be found here.

A nice general description of GA as well as an example of how a GA solve a problem plus codes in Delphi and java is available here

Given any problem to solve, potential solutions are encoded and fed into the program. These potential solutions are called genotypes and the set of all genotypes is called the population of the GA. This population is then 'evolved' using a fitness function and other techniques which changes the population to a new population with better average fitness value. One complete cycle which changes the old population to a new one is taken as one generation.

The 'evolution' is done by using a combination of two techniques which also draw inspiration from biology. The first is the recombination method which is the basically

recombining the genotypes to produce a new genotype. Mutation is the second method involved which is basically mutating the genotype to any possible value permitted by the encoding standard.

This procedure is repeated over many generations. So, in course of time, the GA will optimize its population to one containing comparatively larger number of better solution candidates.

As GA is basically a type of optimization method, it is not suitable for all types of problem. A good description of the types of problems suitable for genetic algorithm can be found here.

A nice general description of GA as well as an example of how a GA solve a problem plus codes in Delphi and java is available here

Adam And Eve Of Robotics

William Grey Walter (1910-1977) was a neurophysiologist and robotician who lived in Bristol, England. Though his name is mostly forgotten nowadays, he built what is arguably the first real demonstration of artificial life way back in 1949. Here's a clip of the autonomous robots he built in 1949.

He named these robots Elmer and Elsie. They are widely regarded by many as the Adam and Eve of robotics. Its interesting to see how modern robots have not shown much advances from these forerunners. These robots were often described as tortoises because of the shape of their design as well as their slow rates of movement. They were capable of phototaxis and returning to recharge themselves once they run low on battery power.Whats even more interesting is that these robots were built without any processors or other digital components which are so widespread and essential in robot building nowadays.

He named these robots Elmer and Elsie. They are widely regarded by many as the Adam and Eve of robotics. Its interesting to see how modern robots have not shown much advances from these forerunners. These robots were often described as tortoises because of the shape of their design as well as their slow rates of movement. They were capable of phototaxis and returning to recharge themselves once they run low on battery power.Whats even more interesting is that these robots were built without any processors or other digital components which are so widespread and essential in robot building nowadays.

The Most Advanced Quadruped Robot On Earth

The first time I saw this video, I thought it was a scene from a science fiction movie. But this is a real robot built by Boston Dynamics. It is called BigDog.

It is a quadruped robot which can run, walk, climb rough terrains and carry heavy loads. The design of the legs are particularly interesting as it seems to resemble a real animal. BigDog is powered by a gasoline engine that drives a hydraulic actuation system. BigDog has an on-board computer that controls locomotion, servos the legs and handles a wide variety of sensors. BigDog’s control system manages the dynamics of its behavior to keep it balanced, steer, navigate, and regulate energetics as conditions vary.

Here’s another video of a less impressive but equally interesting robot by Boston Dynamics.

It is a quadruped robot which can run, walk, climb rough terrains and carry heavy loads. The design of the legs are particularly interesting as it seems to resemble a real animal. BigDog is powered by a gasoline engine that drives a hydraulic actuation system. BigDog has an on-board computer that controls locomotion, servos the legs and handles a wide variety of sensors. BigDog’s control system manages the dynamics of its behavior to keep it balanced, steer, navigate, and regulate energetics as conditions vary.

Here’s another video of a less impressive but equally interesting robot by Boston Dynamics.

Tuesday, 10 March 2009

Episodic Memory System For Autonomous Agents

Episodic memory, a term coined by Endel Tulving, is basically the memory system which provides the ability to recollect past personal experiences. It is that part of the memory which allows you to integrate the ‘what’, ‘when’ and ‘where’ features of personal experiences which can be recollected later. The episodic memory system is different from the semantic memory system although they collaborated closely for various activities.

The idea of building robots with an episodic memory system is an interesting area of research and we'll look at two recent papers on the subject and some of the problems and challenges of building an episodic memory system. But first, let’s look at some of the steps involved in building any memory system.

The stages involved in building an episodic memory system can be broken down as follows:-

(1) Encoding- This phase will deal with how the incident is captured. There are different ways of encoding this captured incident in the internal memory. A good encoding scheme could allow for relational clues among the different incidents captured

(2) Storage- The storage phase is concerned with how the captured memories are maintained. In this phase, there is an interesting problem to address, the question of which captured memories to delete when there the storage is full. Humans also forget things as time elapsed and so the this phase of design has to allow some memories to be ‘forgotten’. However, an exponential decay of memories would be a bad design as there may be some memories which needs to be preserved and are crucial for many other things. As we’ll see later, most of the existing solutions to this problem has not been satisfactory.

(3) Retrieval and use. The retrieval phase is concerned with retrieval of stored memory and the use phase is concerned with what or how the retrieved memory is going to be used.

The first paper - Deutsch,T., Lang,R., Gruber,A.,Velik,R. (2008) Episodic memory for autonomous agents.

This paper discussed most of the existing models on the subject and highlighted the major problems associated with each model. The paper also mentioned ISAC, a model with an interesting view of the role of emotion in remembering and forgetting memories.

Perhaps, the most interesting part is the speculation on the possibility of transferring the memories between semantic and episodic memory systems, to facilitate learning in robots.

The second paper is -

Kuppuswamy,N,S., Cho,S,E., Kim,J,H. (2006) A Cognitive Control Architecture for an Artificial Creature using Episodic Memory.

This paper described the cognition system of a virtual creature called Ritty. The virtual creature is a virtual model of a dog so there are many behaviours which are similar to a real dog. Ritty is placed in a virtual environment and its task is to explore the environment. The simulation showed that Ritty was able to optimize certain tasks by using the episodic memory system.

One of the main problems, which all of the models have is the problem of ‘forgetting’ as mentioned earlier. The ISAC humanoid has an emotion triggered mechanism which is an interesting solution, but this still mechanism still rely on an exponential decay mechanism indirectly. One can argue that the decay mechanism is sufficient for simple robots that perform simple tasks but as the tasks to be performed become more complicated, a more sophisticated mechanism is required.

The idea of building robots with an episodic memory system is an interesting area of research and we'll look at two recent papers on the subject and some of the problems and challenges of building an episodic memory system. But first, let’s look at some of the steps involved in building any memory system.

The stages involved in building an episodic memory system can be broken down as follows:-

(1) Encoding- This phase will deal with how the incident is captured. There are different ways of encoding this captured incident in the internal memory. A good encoding scheme could allow for relational clues among the different incidents captured

(2) Storage- The storage phase is concerned with how the captured memories are maintained. In this phase, there is an interesting problem to address, the question of which captured memories to delete when there the storage is full. Humans also forget things as time elapsed and so the this phase of design has to allow some memories to be ‘forgotten’. However, an exponential decay of memories would be a bad design as there may be some memories which needs to be preserved and are crucial for many other things. As we’ll see later, most of the existing solutions to this problem has not been satisfactory.

(3) Retrieval and use. The retrieval phase is concerned with retrieval of stored memory and the use phase is concerned with what or how the retrieved memory is going to be used.

The first paper - Deutsch,T., Lang,R., Gruber,A.,Velik,R. (2008) Episodic memory for autonomous agents.

This paper discussed most of the existing models on the subject and highlighted the major problems associated with each model. The paper also mentioned ISAC, a model with an interesting view of the role of emotion in remembering and forgetting memories.

Perhaps, the most interesting part is the speculation on the possibility of transferring the memories between semantic and episodic memory systems, to facilitate learning in robots.

The second paper is -

Kuppuswamy,N,S., Cho,S,E., Kim,J,H. (2006) A Cognitive Control Architecture for an Artificial Creature using Episodic Memory.

This paper described the cognition system of a virtual creature called Ritty. The virtual creature is a virtual model of a dog so there are many behaviours which are similar to a real dog. Ritty is placed in a virtual environment and its task is to explore the environment. The simulation showed that Ritty was able to optimize certain tasks by using the episodic memory system.

One of the main problems, which all of the models have is the problem of ‘forgetting’ as mentioned earlier. The ISAC humanoid has an emotion triggered mechanism which is an interesting solution, but this still mechanism still rely on an exponential decay mechanism indirectly. One can argue that the decay mechanism is sufficient for simple robots that perform simple tasks but as the tasks to be performed become more complicated, a more sophisticated mechanism is required.

Subscribe to:

Posts (Atom)